By: Amy C. Weston, Privacy Attorney

After much negotiation, in late 2023, lawmakers in the EU reached political consensus on the contents of the much-hyped Artificial Intelligence Act (AI Act), the first comprehensive AI law of its kind. The text must still undergo final review before formal approval, which is expected in late spring 2024. If adopted, the AI Act would then provide a grace period of two to three years before it becomes enforceable, giving companies time to prepare.

In its current form, the law applies across all sectors in the EU and aims to regulate all AI systems. These are defined broadly to include nearly all forms of machine learning, logic- and knowledge-based systems, and statistical decision-making approaches. The definition covers both standalone AI systems and AI-driven components integrated into otherwise non-AI products.

Who is Subject to the Act?

The law will apply to providers, users, importers, and distributors of AI systems. Its jurisdictional reach is broad. It will apply to US-based companies that provide services or sell products in the EU. It will also apply to any user or provider based outside the EU if the AI system, or any output it generates, is used in the EU. As a result, the AI Act may impose more direct obligations on US companies than the GDPR does. The GDPR distinguishes between controllers, which determine the purposes and means of processing personal data, and processors, which carry out more limited processing on a controller's behalf. The GDPR places the heavier burden on controllers, and many US companies operating under the GDPR are classified as processors. Under the AI Act, by contrast, even companies in processor-like roles are subject to a wide range of direct obligations.

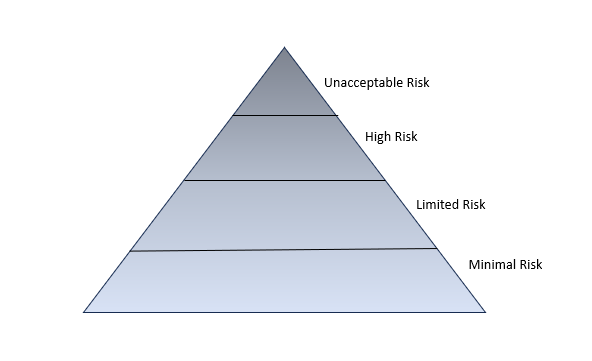

Risk-Based Tiers of AI

So, what does the law cover, and how? The AI Act follows a risk-based approach. Readers have likely already seen the pyramid graphic above. It shows how the AI Act sorts artificial intelligence into four tiers of risk, with a few important exclusions for out-of-scope systems:

- At the top of the pyramid are Unacceptable Risk AI systems, which the law deems to pose an unacceptable level of risk no matter what precautions are in place. These are prohibited. Examples include certain biometric identification systems, such as real-time facial recognition in public spaces (subject to narrow law enforcement exceptions). Social scoring systems are also banned, as are systems that engage in cognitive behavioral manipulation of people or of specific vulnerable groups. Specific examples include voice-assisted toys that encourage dangerous behavior in minors and emotion recognition systems used in the workplace or in schools. They also include systems that categorize people to infer sensitive data such as sexual orientation or religious beliefs.

- Next on the pyramid are High-Risk AI Systems, which are subject to the bulk of the law's oversight and regulation. These are systems (or products that incorporate them) that the law deems to pose a high risk to health or safety. Some examples are systems used to assess an individual's suitability for employment, creditworthiness, or access to higher education. Others verify the authenticity of identity documents for migration or travel, or assist in the administration of justice. In general, an AI system that poses a significant risk of harm to the health, safety, or fundamental rights of individuals falls into this category. The obligations that apply to this category are discussed in more detail below.

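- The third category is Limited Risk AI Systems, such as chatbots, deep fakes, and emotion recognition software used outside the prohibited settings described above. These are permitted subject to lighter obligations, chief among them transparency. For example, people must be told when they are interacting with an AI system.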

- Finally, there are Minimal Risk AI Systems, such as video games and spam filters. Because the law deems these to pose little risk, they will not be subject to regulation under the AI Act.

Systems used for military, defense, or national security purposes are out of scope of the AI Act and are not subject to this classification. The same is true of systems used by public authorities of non-EU countries under international agreements for law enforcement and judicial cooperation. Systems used solely for research and development are likewise exempt from the classification system, as are uses of AI systems by natural persons for purely personal, non-professional activities.

High-Risk AI Systems – Product or Purpose Test

Systems deemed High Risk are further categorized based on whether the AI system is a “product” or is used for a specific “purpose.” Product-based AI systems include AI built into (or itself constituting) products such as certain machinery, radio equipment, personal protective equipment (PPE), medical devices, toys, elevators, motor vehicles, marine equipment, and rail and aviation equipment. These product-based AI systems will be required to undergo a third-party conformity assessment before the product is placed on the EU market.

By contrast, purpose-based AI systems include any system used for one of the following purposes:

- biometric identification
- management of critical infrastructure
- educational admissions
- creditworthiness assessments
- employment and recruiting
- law enforcement, border control, or the administration of justice

For this category, classification turns primarily on the AI system's intended use case.

Providers (and in some cases, users) of these systems will be required to implement transparency measures. These include informing people that they are interacting with an AI system and disclosing when content such as a deep fake has been artificially generated or manipulated. High-risk systems must also come with instructions for use and a transparent disclosure of the system's characteristics, capabilities, and limitations. In addition, certain users of high-risk AI systems (for example, public bodies and some banks and insurers) must complete a fundamental rights impact assessment. This assessment analyzes the risks to individuals before the system is put into use. Other governance requirements include:

- creating risk management systems and maintaining quality controls,

- complying with quality controls around training, validation, and testing datasets,

- providing technical documentation,

- conducting (or, for product-based systems, having a third party conduct) conformity assessments,

- maintaining automatically generated logs,

- providing comprehensible and accessible information to users,

- implementing appropriate and effective human oversight,

- ensuring suitable accuracy, robustness, and cybersecurity,

- taking corrective action in the event of non-compliance or malfunction.

What About Generative AI?

Interestingly, the original proposal did not expressly address generative AI. That proposal was introduced in 2021, before generative AI tools entered widespread public use. Regulators are discussing whether to adopt separate obligations for general purpose AI systems, foundation models such as GPT-4, and generative AI systems used to create new content. The latest text of the law has not yet been released to the public. It will reportedly include a new requirement that content creators provide an “Opt-Out” button so that users can stop their data from being fed back into the AI system.

How Should a US Company Prepare?

- Determine whether the AI Act is likely to apply to you or your users. If so, determine whether you are likely to be considered a provider of a High-Risk AI system. From there, leadership should identify which AI Act obligations would require changes to the company's products or services. Leadership should then prepare a project plan for implementing those changes once the law is adopted. Existing GDPR applicability can serve as a useful guide in this process, so it is important for companies to understand whether and how the GDPR currently applies to them.

- Identify and document the risks posed by your AI systems, assess how to mitigate those risks, and implement appropriate safeguards, such as revising the datasets used to train the AI system. Companies should anticipate and be prepared to adopt requirements around risk assessments and data governance controls.

- Develop policies and procedures around your AI systems. In some cases, companies may be able to leverage GDPR compliance documents to document AI Act compliance efforts. Examples include data protection impact assessments, security policies, and incident response plans.

Penalties for non-compliance are significant: up to €35m or 7% of a company's global annual turnover, whichever is higher. Compare this with the GDPR's maximum penalty of €20m or 4% of global annual turnover. The comparison makes clear that EU authorities are serious about leading the global race to govern AI. Like the GDPR, the AI Act will be enforced at the national and regional level. It will also be enforced by a yet-to-be-formed pan-EU AI Office, to be advised by an AI Board composed of representatives of the EU member states.
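Here is a minimal Python sketch of the “whichever is higher” rule. The function name `max_fine_eur` and the sample turnover figures are hypothetical. The defaults simply encode the €35m / 7% headline figures cited above. The sketch computes only the statutory ceiling. It does not predict any actual fine, which regulators would set case by case.

```python
def max_fine_eur(global_turnover_eur: int,
                 fixed_cap_eur: int = 35_000_000,
                 turnover_percent: int = 7) -> float:
    """Hypothetical helper: return the higher of a fixed cap or a percentage
    of worldwide annual turnover. Defaults assume the headline AI Act figures
    (EUR 35m or 7%). This is the legal ceiling, not an estimate of a fine."""
    # Integer multiplication first keeps the percentage calculation exact.
    percentage_cap = global_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, percentage_cap)


# Illustrative, made-up turnover figures for three hypothetical companies.
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover €{turnover:,} -> ceiling €{max_fine_eur(turnover):,.0f}")
```

With these figures, the loop prints ceilings of €35,000,000, €35,000,000, and €140,000,000. The percentage-based cap overtakes the fixed €35m amount once worldwide turnover exceeds €500m (€35m ÷ 7%). For large companies, the turnover percentage therefore drives maximum exposure.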

It has been five years since GDPR shook the transatlantic tech community and disrupted the language we use to describe how companies collect, use, and transfer personal data. Over that period, despite much handwringing over penalties for non-compliance, we have seen very few enforcement proceedings brought against mid-sized or small US tech companies. Only time will tell whether the same will be true for the AI Act.